Chat Control: How the EU will exploit children for power

This article raises a couple of compelling arguments that have not been discussed elsewhere. Scroll to the bottom for a summary/TL;DR of issues, and to find out how to efficiently contact your local representative with your thoughts.

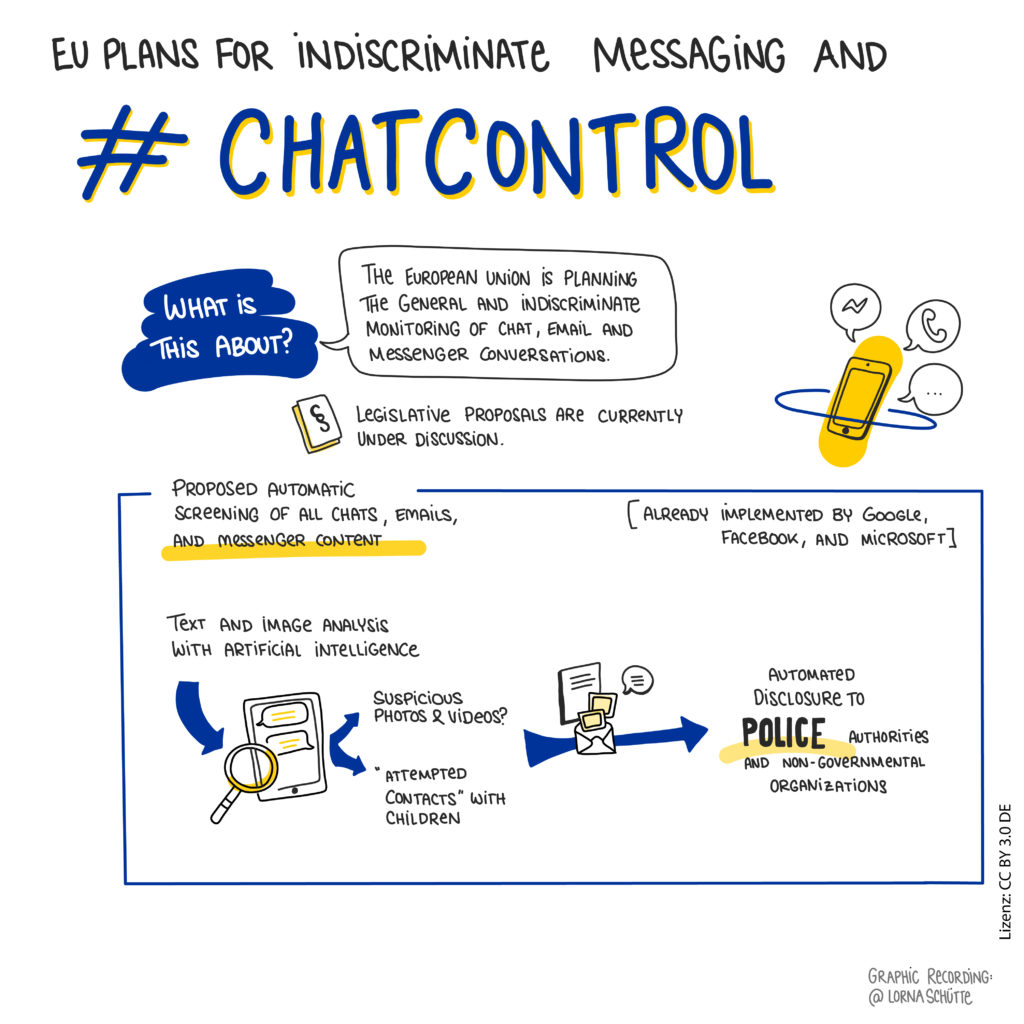

Back in 2022, the European Commissioner for Home Affairs proposed a Regulation to Prevent and Combat Child Sexual Abuse. One of the key purposes of this proposal was to bug the messengers of the entire EU public with government-approved spyware. Known colloquially as 'Chat Control', the regulation failed to pass in 2024, but a new iteration of it is now back on the table.

How it would (probably) work

Each member state would be tasked with implementing its own mechanisms within its own legal framework, but according to documents released by the European Parliament, it would work roughly as follows:

- Apps used for communication, such as WhatsApp, Facebook Messenger, and Signal, would be required to install AI spyware.

- When a person sends images or videos, the spyware would attempt to determine if they depict content that could be defined as 'CSAM'. Text would be analyzed for signs of 'grooming'.

- If the AI detected something it deemed suspicious, it would report the content and user data to the authorities.

The reality of criminalized images of minors, or 'CSAM'

Many critics of Chat Control are hesitant to challenge the prevailing narrative that criminalized images of minors depict horrific acts, and I don't blame them for being so afraid. The reality, however, is that while nightmarish images surely must exist, many cases of 'CSAM' are much more banal. One of the largest operations ever against criminalized depictions of children, Operation Spade, resulted in hundreds of arrests despite the videos being essentially naturist (containing no sexual activity whatsoever).

The vast majority of new 'content' in the 2020s is produced by minors themselves, sometimes - but not usually - by being tricked. This corresponds with a growing trend of sexual communications among teens, also known as 'sexting'.

The reality is far from the picture widely presented, of children being subjected to the most horrific abuse imaginable at the hands of sadistic pot-bellied ogres. Individuals and organizations fighting against Chat Control would do well to stop referring to criminalized material as 'CSAM', 'sexual violence against children', or the like; this misrepresentation triggers strong emotions that will ultimately encourage support for the new scheme. An otherwise excellent write-up by German civil rights group GFF suffered greatly from this unhelpful mischaracterization.

Popular arguments against Chat Control

There have already been a number of criticisms of the proposal. For example:

- It fundamentally undermines the end-to-end encryption technology that many well-intentioned people rely on for their privacy and security.

- It is a blatant violation of the EU Charter of Fundamental Rights, and of rulings made by the European Court of Justice.

- The proposal is part of a wider push to force backdoors on technology companies, under the ProtectEU internal security strategy.

- AI has shown an extreme tendency to incorrectly label images as problematic; Swiss police reported that about 80% of the reports they received were false positives, meaning that most images reported as 'CSAM' are not illegal images of minors.

- Many innocent people will be flagged, their details permanently recorded as part of a 'child sexual abuse' investigation.

- Teens engaging in consensual 'sexting' with each other may be subject to criminal punishment.
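
To make the scale of the false-positive problem concrete, the arithmetic can be sketched as below. This is only an illustration: the 80% share is the Swiss figure cited above, while the report volume of 10,000 and the function name `split_reports` are hypothetical, chosen for the example and not taken from any authority's data.

```python
def split_reports(total_reports: int, false_share: float) -> tuple[int, int]:
    """Split a number of reports into (false, genuine) counts.

    false_share is the fraction of reports that turn out to be
    false positives, e.g. 0.80 for the Swiss figure cited in the text.
    """
    if not 0.0 <= false_share <= 1.0:
        raise ValueError("false_share must be between 0 and 1")
    false_reports = round(total_reports * false_share)
    return false_reports, total_reports - false_reports


# Hypothetical volume of 10,000 reports; only the 0.80 share comes from the article.
false_reports, genuine_reports = split_reports(10_000, 0.80)
print(f"{false_reports} false, {genuine_reports} genuine")
```

Under these assumed numbers, four out of every five reports would concern content that is not illegal, yet each one would still expose a person's private material and account details to review.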

An issue that has been ignored

What has apparently not been discussed, but is perhaps the most powerful argument against Chat Control, is that AI scanning of messenger apps would inevitably lead to private images of minors being leaked.

As discussed above, criminalized images of minors can be much less extreme than the public has been led to believe. A nude sent by one minor to another, likely provocatively posed, would absolutely qualify as 'CSAM'. It would almost certainly be flagged by AI and sent to the authorities, where a team of adults would review it. Even beach or bathtub photos, or medical images showing nudity, are likely to be flagged and shared with a team of strangers.

Having a stranger see your embarrassing photos and corresponding account details is bad enough; one would at least hope that the adults reviewing the material could be trusted to behave professionally. That is unfortunately not the case. A brief perusal of news articles at any point in time reveals dozens of recent cases of law enforcement officials, including child protection workers and heads of child sex crime units, being arrested for child pornography and other child sex offenses. No matter how many assurances or technological solutions are promised by the proposal's architects, it is inevitable that some flagged images of young people will be leaked by those entrusted with 'reviewing' them. Rather than preventing the spread of images of sexual abuse - the supposed goal - the technology will ultimately leak private images of minors to the public, of the kind which those involved would absolutely not want strangers to view. Furthermore, should a particularly malicious officer like what he sees, he would have the requisite information to contact the young person as well as material with which to blackmail them.

Once the private images are leaked, they will be spread on darknet forums that are extremely hard to police, and they will never disappear. They could even then be spread via the very apps targeted by Chat Control, using encrypted archives such as those easily made with WinRAR or 7-Zip, which render content invisible to the spyware. The sharing that the regulation ostensibly aims to prevent will therefore continue, and in a manner that is even harder to detect.

New problems are created, while existing problems are not solved.

The end game

If you have now come to the conclusion that 'Chat Control' is a bad idea, you are both right and wrong. It is an absolutely terrible idea from a child protection standpoint. However, it is an excellent idea if you're looking to open the door to wider surveillance in the future. By ostensibly targeting only criminalized images of minors, and nudging people to imagine them in their most extreme and offensive form, the Chat Control proposal acts as a Trojan horse for mass surveillance of private individuals on an even larger and more intrusive scale. Other observers have pointed out how Chat Control fits into the ProtectEU scheme, one which aims to provide authorities with 'lawful' access to all encrypted data by 2030. Breaking encryption mathematically within this time frame is basically impossible, so the only options remaining would be forced backdoors or the requirement to provide passwords to law enforcement upon demand. Tools such as VeraCrypt offer a way around a disclosure requirement (hidden volumes that provide plausible deniability), so one would assume the intention is to mandate a backdoor.

Forcing backdoors upon distributors of encryption software would be one solution, but this would apply solely to those living or selling software in the EU, which would not capture free programs like VeraCrypt. Therefore, the only effective method left for the EU would be a requirement for backdoors to be placed within major operating systems distributed commercially within its borders, such as Windows, macOS, Android, and iOS. The EU market is sufficiently large that Microsoft, Apple and Google would be hesitant not to comply. All that would remain is to convince the public that the intrusion is reasonable and necessary. And with a precedent in place - Chat Control - a little more scanning might not feel like such a giant leap. Incrementally, EU governments could force tech giants to monitor everything a person does on their devices, at first 'to detect CSAM' (and potentially leaking private images that were not exploitative and never intended to be shared).

With the 'grooming detection' baked into the Chat Control proposals, scanning of text would already be legitimized, and extending this to scan for other 'problematic behavior' would be trivial. Before long, AI could be scanning devices for whatever each member state demanded, such as speech it decided was dangerous or subversive in any way. The fact that EU member states are 'democracies' means very little. We've already seen how rapidly the second Trump administration has turned the USA into a dystopian autocracy, giving ICE access to Medicaid data and allowing it to use foreign spyware to target perceived adversaries. Given the current state of global politics, it's only a matter of time before such leaders are elected in EU member states. The future looks extremely bleak should Chat Control be allowed to act as the Trojan horse it is intended to be.

Summary / TL;DR

- The definition of 'CSAM' is far broader than many believe; nudist-type images qualify.

- Family pictures, medical images, and teens sexting will likely be flagged by AI.

- Images flagged by AI will be sent to the authorities. This will include nude images sent privately by teens to other teens, as well as other sensitive and embarrassing content.

- Authorities cannot be trusted to behave professionally; there are endless cases of law enforcement officers being arrested for child pornography and other child sex offenses.

- Images leaked by malicious 'reviewers' will be spread on the darknet, and encrypted archives will then be shared on messengers completely undetected.

- People wrongly flagged will still have their content and user details recorded as part of a 'child sexual abuse' investigation.

- The system proposed now is alleged to target only something most people abhor. This is a Trojan horse for wider surveillance.

- Should a Trump-like government take control of an EU country, which is not unlikely given the current state of global politics, the spy tools will no doubt be used for highly nefarious purposes.

Want to express opposition? Please contact your MEP, as well as civil rights and media organizations, urging them to consider the very real risk posed to members of the public, including minors. Let them know that this proposal will leak sensitive photos to third parties who cannot be fully trusted, while not preventing the spread of criminalized images of minors by those who are motivated and technologically astute. See Fight Chat Control (unaffiliated) for an easy way to contact your MEP.